Google announced a wide range of upgrades for its Gemini assistant today. To start, the new Gemini 2.0 Flash Thinking Experimental model now accepts file uploads as input and has received a speed boost.

The more notable update, however, is a new opt-in feature called Personalization. In a nutshell, when you ask Gemini a question, it looks at your Google Search history and offers a tailored response. Down the road, Personalization will expand beyond Search. Google says Gemini will also tap into other ecosystem apps such as Photos and YouTube to offer more personalized responses. The idea is somewhat like the personalized AI features Apple planned for Siri, which have been delayed, a setback that even prompted Apple to pull its ads.

In the AI chatbot wars, Google thinks the key to retaining users is serving up content they can’t get elsewhere, like answers shaped by their internet habits.

On Thursday, the company announced Gemini with personalization, a new “experimental capability” for its Gemini chatbot apps that lets Gemini draw on other Google apps and services to deliver customized responses. Gemini with personalization can tap a user’s activities and preferences across Google’s product ecosystem to deliver tailored answers to queries, according to Gemini product director Dave Citron.

“These updates are all designed to make Gemini feel less like a tool and more like a natural extension of you, anticipating your needs with truly personalized assistance,” Citron wrote in a blog post provided to The Tech Spot. “Early testers have found Gemini with personalization helpful for brainstorming and getting personalized recommendations.”

Gemini with personalization, which will integrate with Google Search before expanding to additional Google services like Google Photos and YouTube in the months to come, arrives as chatbot makers including OpenAI attempt to differentiate their virtual assistants with unique and compelling functionality. OpenAI recently rolled out the ability for ChatGPT on macOS to directly edit code in supported apps, while Amazon is preparing to launch an “agentic” reimagining of Alexa.

Citron said Gemini with personalization is powered by Google’s Gemini 2.0 Flash Thinking Experimental model, a so-called “reasoning” model that can determine whether personal data from a Google service, like a user’s Search history, is likely to “enhance” an answer. Narrow questions informed by likes and dislikes, like “Where should I go on vacation this summer?” and “What would you suggest I learn as a new hobby?” will benefit the most, Citron continued.

“For example, you can ask Gemini for restaurant recommendations and it will reference your recent food-related searches,” he said, “or ask for travel advice and Gemini will respond based on destinations you’ve previously searched.”

If this all sounds like a privacy nightmare, well, it could be. It’s not tough to imagine a scenario in which Gemini inadvertently airs someone’s sensitive info.

That’s probably why Google is making Gemini with personalization opt-in — and excluding users under the age of 18. Gemini will ask for permission before connecting to Google Search history and other apps, Citron said, and show which data sources were used to customize the bot’s responses.

“When you’re using the personalization experiment, Gemini displays a clear banner with a link to easily disconnect your Search history,” Citron said. “Gemini will only access your Search history when you’ve selected Gemini with personalization, when you’ve given Gemini permission to connect to your Search history, and when you have Web & App Activity on.”
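The three conditions Citron lists amount to a simple all-or-nothing check. The snippet below is only an illustrative sketch of that logic, not Google's implementation; the `UserSettings` class, its field names, and `may_use_search_history` are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass
class UserSettings:
    """Hypothetical container for the three settings named in the quote."""
    personalization_selected: bool    # "Gemini with personalization" chosen in the model menu
    search_history_permission: bool   # user allowed Gemini to connect to Search history
    web_and_app_activity_on: bool     # Web & App Activity is enabled on the account


def may_use_search_history(settings: UserSettings) -> bool:
    """Return True only when all three conditions hold at the same time."""
    return (
        settings.personalization_selected
        and settings.search_history_permission
        and settings.web_and_app_activity_on
    )


# Example: permission granted, but Web & App Activity is off, so access is denied.
example = UserSettings(True, True, False)
print(may_use_search_history(example))
```

Under this reading, turning off any one of the three settings is enough to stop Gemini from drawing on Search history.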

Gemini with personalization will roll out to Gemini users on the web (except for Google Workspace and Google for Education customers) starting Thursday in the app’s model drop-down menu and “gradually” come to mobile after that. It’ll be available in over 40 languages in “the majority” of countries, Citron said, excluding the European Economic Area, Switzerland, and the U.K.

Citron indicated that the feature may not be free forever.

“Future usage limits may apply,” he wrote in the blog post. “We’ll continue to gather user feedback on the most useful applications of this capability.”

New models

As added incentives to stick with Gemini, Google announced updated models, research capabilities, and app connectors for the platform.

Subscribers to Gemini Advanced, Google’s $20-per-month premium subscription, can now use a standalone version of 2.0 Flash Thinking Experimental that supports file attachments; integrations with apps like Google Calendar, Notes, and Tasks; and a 1-million-token context window. “Context window” refers to the amount of text that the model can consider at any given time. One million tokens is equivalent to around 750,000 words.

Google said that this latest version of 2.0 Flash Thinking Experimental is faster and more efficient than the model it is replacing, and can better handle prompts that involve multiple apps, like “Look up an easy cookie recipe on YouTube, add the ingredients to my shopping list, and find me grocery stores that are still open nearby.”

Integrating Gemini within more apps

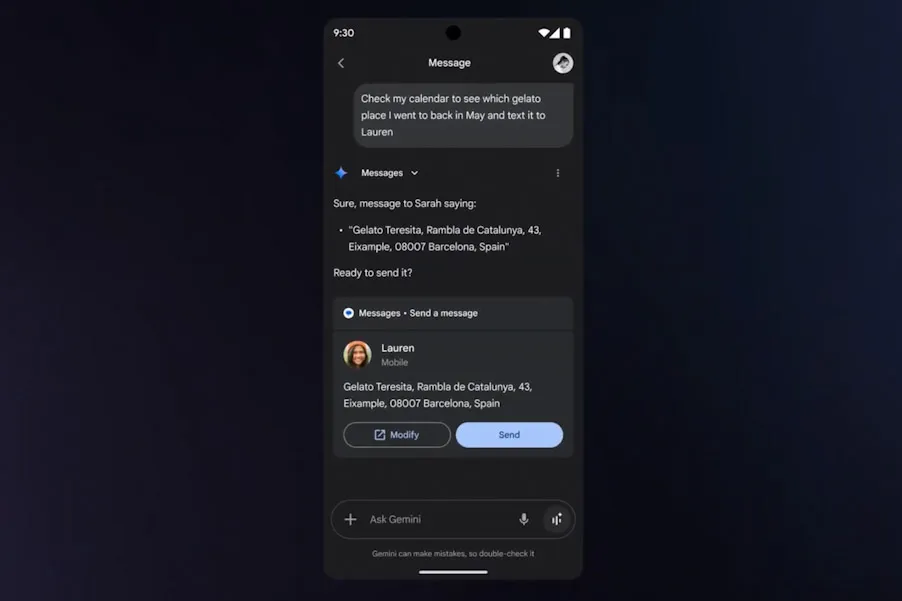

Gemini has the ability to interact with other applications — Google’s as well as third-party — using an “apps” system, previously known as extensions. It’s a neat convenience, as it allows users to get work done across different apps without even launching them.

Google is now bringing access to these apps to the Gemini 2.0 Flash Thinking Experimental model. Moreover, the pool of apps is being expanded to include Google Photos and Notes. Gemini already has access to YouTube, Maps, Google Flights, Google Hotels, Keep, Drive, Docs, Calendar, and Gmail.

Users can also enable the apps system for third-party services such as WhatsApp and Spotify by linking them with their Google account. Aside from pulling information and getting tasks done across different apps, the system lets users execute multi-step workflows.

For example, with a single voice command, users can ask Gemini to look up a recipe on YouTube, add the ingredients to their notes, and find a nearby grocery shop. In a few weeks, Google Photos will also be added to the list of apps that Gemini can access.

Perhaps in response to pressure from OpenAI and its newly launched tools for in-depth research, Google is also enhancing Deep Research, its Gemini feature that searches across the web to compile reports on a subject. Deep Research now exposes its “thinking” steps and uses 2.0 Flash Thinking Experimental as the default model, which should result in “higher-quality” reports that are more “detailed” and “insightful,” Google said.

Deep Research is now free to try for all Gemini users, and Google has increased usage limits for Gemini Advanced customers.

Free Gemini users are also getting Gems, Google’s topic-focused customizable chatbots within Gemini, which previously required a Gemini Advanced subscription. And in the coming weeks, all Gemini users will be able to interact with Google Photos to, for example, look up photos from a recent trip, Google said.

Google is also expanding the context window to 1 million tokens for the Gemini 2.0 Flash Thinking Experimental model. AI tools such as Gemini break text down into tokens, with an average English word translating to roughly 1.3 tokens.

The larger the context window, the more input the model can accept at once. With the increased context window, Gemini 2.0 Flash Thinking Experimental can now process much bigger chunks of information and tackle more complex problems.
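To put the numbers in perspective, here is a rough back-of-the-envelope sketch of the token arithmetic. It assumes the roughly 1.3 tokens-per-word average mentioned above; real tokenizers vary with the text, so the results are estimates only, and the function names are made up for this example.

```python
# Rough token/word conversion using an average of ~1.3 tokens per English word.
# This is an estimate, not how any particular tokenizer actually counts.
TOKENS_PER_WORD = 1.3
CONTEXT_WINDOW_TOKENS = 1_000_000


def words_to_tokens(word_count: int, tokens_per_word: float = TOKENS_PER_WORD) -> int:
    """Estimate how many tokens a text of `word_count` words would use."""
    return round(word_count * tokens_per_word)


def tokens_to_words(token_count: int, tokens_per_word: float = TOKENS_PER_WORD) -> int:
    """Estimate how many words fit in `token_count` tokens (rounded down)."""
    return int(token_count / tokens_per_word)


def fits_in_context(word_count: int, window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Check whether a text of `word_count` words likely fits in the window."""
    return words_to_tokens(word_count) <= window


print(tokens_to_words(CONTEXT_WINDOW_TOKENS))  # estimated word capacity of the window
print(fits_in_context(750_000))                # a 750,000-word input
print(fits_in_context(800_000))                # an 800,000-word input
```

At 1.3 tokens per word, a 1-million-token window works out to somewhere around 770,000 words, in the same ballpark as the 750,000-word figure often quoted for it. Both numbers are approximations.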