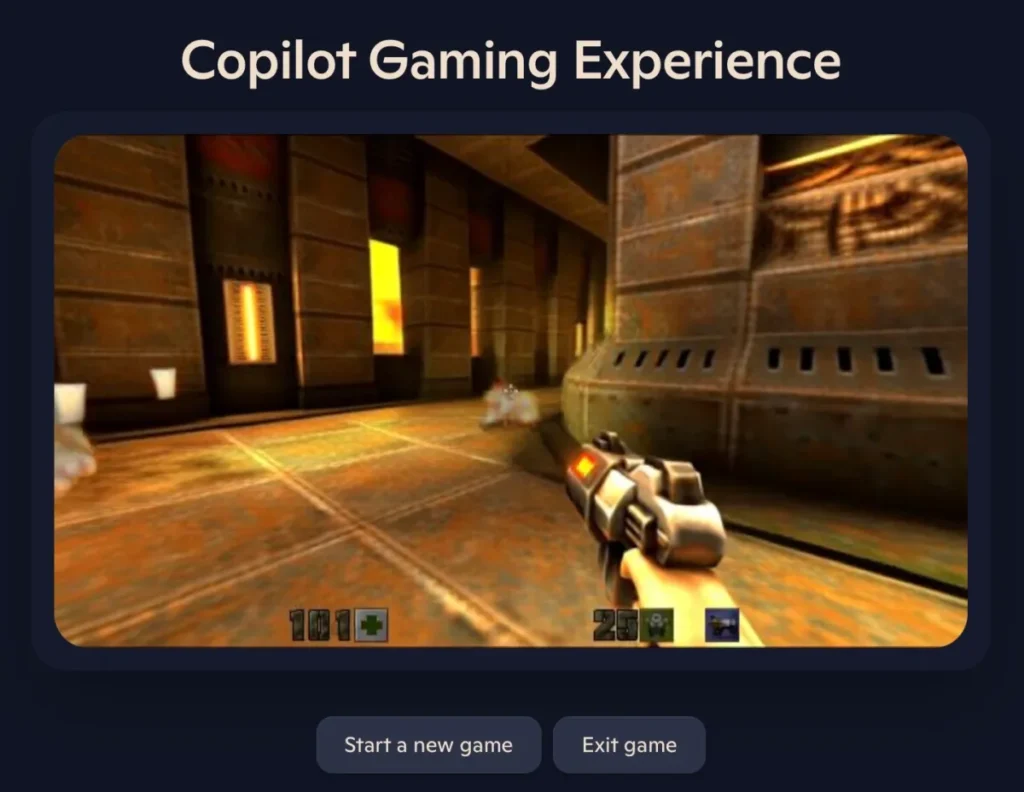

Microsoft has released a browser-based, playable level of the classic video game Quake II. This functions as a tech demo for the gaming capabilities of Microsoft’s Copilot AI platform — though by the company’s own admission, the experience isn’t quite the same as playing a well-made game.

You can try it out for yourself, using your keyboard to navigate a single level of Quake II for a couple minutes before you hit the time limit.

In a blog post describing their work, Microsoft researchers said their Muse family of AI models for video games allows users to “interact with the model through keyboard/controller actions and see the effects of your actions immediately, essentially allowing you to play inside the model.”

An overview of the WHAM setup is shown in Figure 1. On the left, we utilise a ViT-VQGAN [3] to tokenise the image. On the right, we model that new sequence of tokens utilising a decoder-only transformer. Much like an LLM, it is trained to predict the next token in the sequence. Please refer to our paper [1] for more details.
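To make the one-token-at-a-time idea concrete, here is a minimal Python sketch of next-token generation. It is an illustration only, not the researchers' code: `toy_next_token_logits`, `generate_image_tokens`, and the `VOCAB_SIZE` of 16 are hypothetical stand-ins, and the toy scorer ignores its input where a real transformer would condition on it. The 540-token image size is the WHAM figure quoted in the blog post.

```python
import random

VOCAB_SIZE = 16          # hypothetical codebook size; not given in the source
TOKENS_PER_IMAGE = 540   # from the blog post: one 300x180 WHAM image -> 540 tokens


def toy_next_token_logits(prefix, rng):
    """Stand-in for a decoder-only transformer: one score per vocabulary entry.

    A real model would condition on the prefix; this toy ignores it.
    """
    return [rng.random() for _ in range(VOCAB_SIZE)]


def generate_image_tokens(context, n_tokens, rng):
    """Append n_tokens to the context one at a time, counting forward passes."""
    sequence = list(context)
    forward_passes = 0
    for _ in range(n_tokens):
        logits = toy_next_token_logits(sequence, rng)
        forward_passes += 1
        # Greedy choice: take the highest-scoring token.
        next_token = max(range(VOCAB_SIZE), key=logits.__getitem__)
        sequence.append(next_token)
    return sequence[len(context):], forward_passes


rng = random.Random(0)
tokens, passes = generate_image_tokens(context=[], n_tokens=TOKENS_PER_IMAGE, rng=rng)
print(len(tokens), passes)  # 540 540
```

Each generated token costs one forward pass, so a 540-token image needs 540 passes under this scheme.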

An overview of the WHAMM architecture is shown in Figure 2. As shown on the left, we first tokenise the image, exactly as in WHAM. For this specific setting, each 640×360 image is turned into 576 tokens (for WHAM, each 300×180 image was turned into 540 tokens). Since WHAM generates one token at a time, generating the 540 tokens needed to form an image can take a long time. In contrast, a MaskGIT-style setup can generate all of the tokens for an image in as few forward passes as we want. This enables us to generate the image tokens fast enough to facilitate a real-time experience.

Typically, in a MaskGIT setup you would start with all of the tokens for an image masked and then produce predictions for each and every one of them. We can think of this as producing a rough-and-ready first pass for the image. We would then re-mask some of those tokens, predict them again, re-mask, and so on. This iterative procedure allows us to gradually refine our image prediction. However, since we have tight constraints on the time we can take to produce an image, we are very limited in how many passes we can do through a big transformer.
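The mask, predict, re-mask loop described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not the WHAMM implementation: `toy_predict_all` and `maskgit_decode` are hypothetical names, the predictor returns random guesses, the `VOCAB_SIZE` of 16 is made up, and the cosine masking schedule is borrowed from the original MaskGIT work because the blog post does not say which schedule WHAMM uses.

```python
import math
import random

MASK = -1                # sentinel marking a position that is still masked
VOCAB_SIZE = 16          # hypothetical codebook size; not given in the source
TOKENS_PER_IMAGE = 576   # from the blog post: one 640x360 image -> 576 tokens


def toy_predict_all(tokens, rng):
    """Stand-in for one forward pass: a (token, confidence) guess per position.

    A real model would condition on the unmasked tokens; this toy ignores them.
    """
    return [(rng.randrange(VOCAB_SIZE), rng.random()) for _ in tokens]


def maskgit_decode(n_tokens, n_passes, rng):
    """Fill n_tokens masked positions using exactly n_passes forward passes."""
    tokens = [MASK] * n_tokens
    for step in range(1, n_passes + 1):
        predictions = toy_predict_all(tokens, rng)  # predict every position at once
        # Cosine schedule (assumed): how many positions stay masked after this pass.
        still_masked = int(n_tokens * math.cos(math.pi / 2 * step / n_passes))
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        # Commit the most confident guesses; the rest stay masked for the next pass.
        masked.sort(key=lambda i: predictions[i][1], reverse=True)
        for i in masked[: len(masked) - still_masked]:
            tokens[i] = predictions[i][0]
    return tokens


decoded = maskgit_decode(TOKENS_PER_IMAGE, n_passes=4, rng=random.Random(0))
print(decoded.count(MASK), len(decoded))  # 0 576
```

With `n_passes=4`, all 576 positions are filled in four forward passes, compared with one pass per token when generating sequentially. Fewer passes leave less room for refinement, which is the trade-off the paragraph above describes.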

Quake II WHAMM

The fun part is then being able to play a simulated version of the game inside the model. After the release of WHAM-1.6B only 6 short weeks ago, we immediately launched into this project on training WHAMM for a new game. This entailed gathering the data from scratch, refining our earlier WHAMM prototypes, and then the actual training of both the image encoder/decoder and the WHAMM models.

A concerted effort by the team went into both planning out what data to collect (what game, how the testers should play said game, what kind of behaviours we might need to train a world model, etc.) and the actual collection, preparation, and cleaning of the data required for model training.

Much to our initial delight, we were able to play inside the world that the model was simulating. We could wander around, move the camera, jump, crouch, shoot, and even blow up barrels, similar to the original game. Additionally, since it features in our data, we can also discover some of the secrets hidden in this level of Quake II.

To show off these capabilities, the researchers trained their model on a Quake II level (which Microsoft owns through its acquisition of ZeniMax). “Much to our initial delight we were able to play inside the world that the model was simulating,” they wrote. “We could wander around, move the camera, jump, crouch, shoot, and even blow-up barrels similar to the original game.”

At the same time, the researchers emphasized that this is meant to be “a research exploration” and should be thought of as “playing the model as opposed to playing the game.”

More specifically, they acknowledged “limitations and shortcomings,” like the fact that enemies are fuzzy, the damage and health counters can be inaccurate, and most strikingly, the model struggles with object permanence, frequently forgetting about things that are out of view for 0.9 seconds or longer.

In the researchers’ view, this can “also be a source of fun, whereby you can defeat or spawn enemies by looking at the floor for a second and then looking back up,” or even “teleport around the map by looking up at the sky and then back down.”

Writer and game designer Austin Walker was less impressed by this approach, posting a gameplay video in which he spent most of his time trapped in a dark room. This also happened to me both times I tried to play the demo, though I’ll admit I’m extremely bad at first-person shooters.

Referring to Microsoft Gaming CEO Phil Spencer’s recent statement that AI models could help with game preservation by making classic games “portable to any platform,” Walker argued this reveals “a fundamental misunderstanding of not only this tech but how games WORK.”

“The internal workings of games like Quake — code, design, 3d art, audio — produce specific cases of play, including surprising edge cases,” Walker wrote.

“That is a big part of what makes games good. If you aren’t actually able to rebuild the key inner workings, then you lose access to those unpredictable edge cases.”